CES 2026: How Emerging Technology is Shaping the Year Ahead

An expert breakdown of CES 2026, exploring how robotics, intelligent devices, and next‑gen computing are shaping technology and professional workflows in 2026.

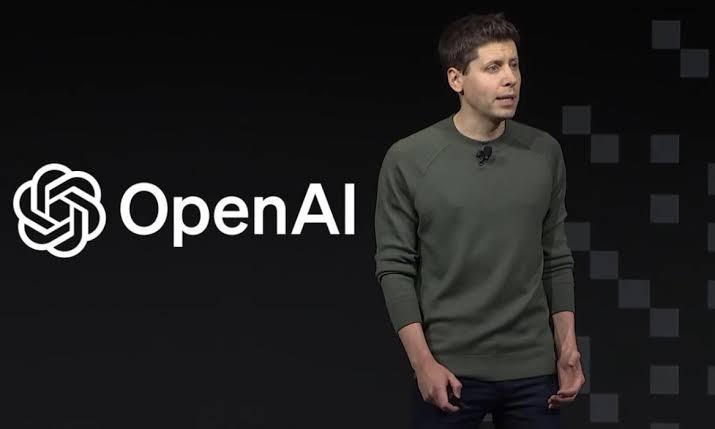

OpenAI has released two open-weight AI chatbot models, a major move toward transparency. But the decision has stirred debate over the fine line between driving innovation and enabling misuse.

OpenAI Releases Open-Weight AI Chatbot Models — But Not Everyone Is Celebrating

In a move that has both thrilled researchers and alarmed ethicists, OpenAI has released two of its AI chatbot models with open weights, allowing developers and organizations to access, run, and fine-tune the models directly. While this step supports the global push toward transparency and innovation in artificial intelligence, it also raises pressing concerns about misuse, misinformation, and a lack of guardrails.

What Are “Open-Weight” Models?

Unlike closed models (like GPT-4 or Claude), which are reachable only through a provider's hosted service, open-weight models publish their trained parameters, so anyone can download them and deploy the model on their own infrastructure. This level of access supports:

OpenAI’s release follows similar moves by Meta (LLaMA), Mistral, and others—but given OpenAI’s leading role in generative AI, this release has broader symbolic and practical weight.

Why This Is a Big Deal

OpenAI has long been known for prioritizing safety and alignment before releasing powerful tools. So, opening the weights of its models marks a significant departure—especially considering the current climate of AI misuse in:

For researchers and developers, it’s a huge win for transparency and experimentation. But critics argue it could accelerate access to powerful tools for bad actors.

The Innovation vs. Misuse Debate

The release has ignited a fierce debate across AI communities:

Pro-Innovation Viewpoint:

Open-weight models democratize access, allow for meaningful benchmarking, and promote decentralized innovation. Closed models concentrate power among tech giants.

Risk & Misuse Perspective:

Without proper safeguards, open models can be fine-tuned for unethical uses, circumvent content restrictions, or be deployed in uncontrolled environments, leading to real-world harm.

This mirrors past tensions in tech: freedom vs. control, decentralization vs. responsibility.

How OpenAI Is Framing the Release

OpenAI has stated that the released models are not at the GPT-4 level; they are smaller models intended for responsible research and development. However, many experts believe the gesture signals a gradual shift toward more open practices, especially under pressure from competitors.

OpenAI has also committed to ongoing monitoring and community collaboration to address safety concerns.

What’s Next?

The open-weight release by OpenAI could have ripple effects across:

Whether this will lead to faster innovation or faster weaponization of AI tools remains to be seen.

Final Thoughts

OpenAI’s move marks a pivotal moment in the evolution of generative AI—a bold step toward openness that walks a tightrope between empowerment and exploitation. As more companies follow suit, the need for clear ethical frameworks and community standards will become even more critical.

Will this usher in a new era of open innovation—or open a Pandora’s box of AI misuse?

Let us know what you think in the comments.👇
